Many businesses hear GenAI and think immediately of ChatGPT but, the world of open source models offers even more opportunity with more flexibility and less cost. As the name implies, open source models are a type of machine learning model whose inner workings are openly shared. This means that anyone can access details like the model's structure, its weights, biases, and other key parameters.

The release of Meta's LLaMA and LLaMA 2 models significantly boosted the popularity of open-source models. Since then, hundreds of different models have been made available to the public. Hosting these models is challenging and time consuming without the right knowledge or tools. This is what we set out to solve when building Verta’s GenAI Workbench.

In this post, we'll explore how we at Verta host open source LLMs, providing insights into our approach and methodology.

What does it mean to "host" an open source LLM?

Hosting a large language model (LLM) means operating an instance of the model using your own resources and making it accessible to your users.

Like all computer programs, LLMs need CPUs and RAM to operate. The required amount of each resource varies depending on the specific models being hosted. Additionally, most LLMs (but not all) need a GPU or another type of accelerator to achieve satisfactory performance.

To make the models accessible to others, some form of network server architecture is needed. This can be as simple as an HTTP server with a REST interface.

LLMs can now be run in a variety of environments, ranging from small IoT devices like Raspberry Pi or your laptop to GPU clusters in the cloud.

At Verta, we utilize llama.cpp, an open source project that enables LLMs to operate across a range of environments.

What is llama.cpp?

llama.cpp is an open-source project created by Georgi Gerganov from Sofia, Bulgaria. It evolved from Georgi's earlier project, whisper.cpp, which is an open-source implementation of the Whisper speech-to-text model.

Llama.cpp aims to bring model inference to less powerful, commonly available hardware, as stated in its "manifesto." Initially designed for CPU-only inference, it has expanded to support various GPU architectures, including CUDA, Apple's Metal Performance Shaders, and hybrid CPU+GPU inference. It offers advanced features like quantization to lower memory requirements and boasts high portability across macOS, Linux, Windows, BSD, and containerized environments like Docker.

Distributed under the MIT license, llama.cpp now enjoys contributions from nearly 600 developers.

The easiest way to get started with llama.cpp is to download it from git via `git clone` (The llama.cpp `README.md` has extensive details on how to build the project).

Using llama.cpp as a server

Llama.cpp provides various interaction methods, including command-line arguments, an interactive loop, and its own HTTP server implementation.

A key feature of the llama.cpp server is its compatibility with OpenAI client libraries, enabling a wide range of applications to run against locally hosted LLMs with minimal changes. This interoperability was a significant factor in our decision to use a llama.cpp-based server at Verta. It allows us to maintain a single application codebase that can integrate with both OpenAI models like ChatGPT and locally hosted models, offering a streamlined and efficient solution.

Another option is the llama-cpp-py Python bindings library, which brings most llama.cpp functionality into Python for easy integration into existing Python codebases. We chose the llama-cpp-py binding strategy because it seamlessly fits into our Python environment, allowing us to reuse our existing libraries and tooling.

This library can be used by adding `llama-cpp-py` to your project requirements.txt, Poetry file, or directly installed with pip.

Running model inference with llama.cpp

When using llama.cpp to run a model, a few key factors must be considered:

- Model Architecture Support: Llama.cpp supports over two dozen model architectures, including multi-modal models, with new architectures added regularly. This wide range of support typically ensures compatibility with most models.

- Model Conversion to GGUF: Models need to be converted into a file format called GGUF, which contains the model's weights, biases, and parameters, along with metadata about the model's structure. This format is optimized for use by the C++ program, rather than the PyTorch binary blobs or safetensors formats. Conversion scripts are provided to convert models from PyTorch and safetensor file formats to GGUF. These scripts also offer the option to quantize or reduce the precision of the model parameters during the conversion process.

- Quantization and Half-Precision: These techniques help reduce the size and RAM usage of models. In standard formats, model parameters are usually stored as 32-bit floating-point numbers, with each parameter taking up 4 bytes. Half-precision reduces this to 16 bits (2 bytes), while quantization can further reduce the information for each parameter to 8 bits or fewer.

Converting PyTorch models to GGUF

The other way to get your model data into llamaccp is by running the conversion script

- Download the original weights (parameters) for the model. This will be a directory which contains files such as `pytorch_model.bin` or `model.safetensors`.

- Ensure the `convert.py` requirements are installed by running `pip install -r requirements.txt`

- Run `python convert.py --outfile model.gguf /path/to/downloaded_model`

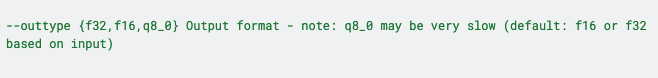

The quantization or half precision can be controlled with the `--outtype` argument:

Conversion is a CPU intensive process. If you have a machine with a lot of cores, you can increase the performance by setting the `--concurrency` parameter to the number of cores you have.

Downloading existing GGUF models

Many developers and organizations have begun to package models as GGUF as a first class file type. This removes the conversion step completely.

You can also often find GGUF versions of models on HuggingFace, from developers such as [Tom Jobbins] who run conversions and upload the results.

Taking the model for a spin

Before running the model in server mode, it can be good to interact with it directly and ensure it is working as expected.

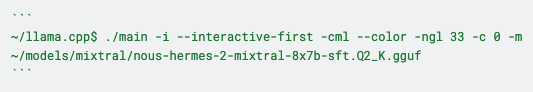

Below is an example of running llama.cpp for the [Nous Hermes 2 - Mixtral 8x7B - SFT ] model, which has been quantized down to 2 bits per parameter.

Here's a breakdown of the command line arguments:

- -i : enables interactive mode

- --interactive-first : gives the user an opportunity to write the first input, instead of the model

- -cml: enables ChatML mode, which the model has been fine-tuned with

- --color: enables colorization of user input

- -ngl 33: the number of layers to offload to the GPU. Ideally this should be all layers if your GPU has enough VRAM.

- -c 0: determine the context length from the model information

- -m: The location of the model file to use

You will see various debug messages as the model is loaded.

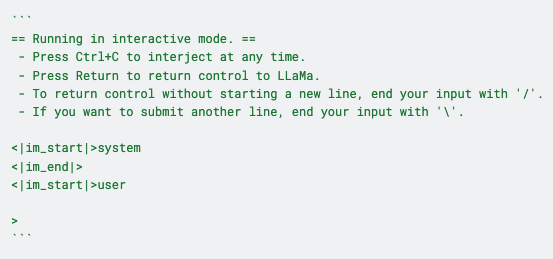

Finally, you will be presented with an interactive prompt.

At this point, you can have a conversation with your model!

Running the model in server mode

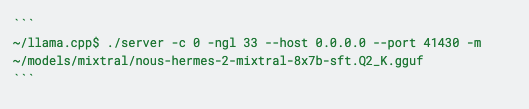

The llama.cpp server command is similar to the interactive command:

This will start an OpenAI compatible server listening on port 41430. You can now use the host and port from above to connect the OpenAI library by setting its API Base URL to the host and port above.

For example, using the Python OpenAI library there are several ways that this url can be defined:

- OPENAI_BASE_URL environment variable

- Ex: export OPENAI_BASE_URL=http://192.168.1.2:4143/v1

- OpenAI constructor base_url parameter

- Ex: openai.OpenAI(base_url=base_url, api_key="any")

- Ex: openai.OpenAI(base_url=base_url, api_key="any")

openai.base_url = “http://192.168.1.2:41430/v1” # where the ip is the external ip of the llama.cpp server

openai.api_key = "any" # server defaults to allowing any key. This can be changed with server arguments.

Efficiently using GPU resources

When utilizing a GPU with substantial VRAM or hosting multiple small models, it's feasible to co-host several models on a single GPU. The primary limitation is the total available GPU VRAM. You can operate multiple instances of the llama.cpp server, utilizing a single graphics card until the memory is fully allocated. In CUDA environments, GPU memory usage can be monitored using nvidia-smi. Alternatively, you can activate Prometheus metrics on the server with the --metrics flag for memory usage tracking.

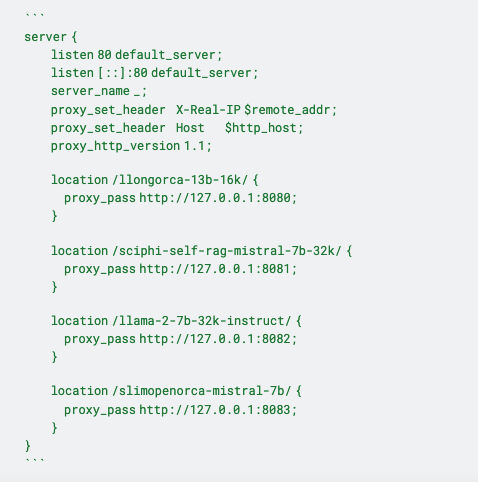

For instance, each model can be hosted on a server that listens on a distinct TCP port. A reverse proxy, such as nginx, can be configured to sit in front of this group of servers, effectively combining them into a single virtual server.

An nginx configuration can utilize proxy_pass to route requests to different models based on the request path:

Final thoughts

Llama.cpp is a versatile tool that can be utilized at every stage of LLM deployment. It's great for early-stage testing in interactive mode and can also power your Python apps that rely on OpenAI libraries.

With significant advantages like its ability to run almost anywhere, this greatly lowers the barrier to entry for those looking to get started with LLMs that aren’t just ChatGPT. If you want to see this work in action, try out our GenAI workbench at app.vert.ai.

Subscribe To Our Blog

Get the latest from Verta delivered directly to you email.