This blog post is part of our series on Serverless Inference for Machine Learning models accompanying our KubeCon 2020 talk: Serverless for ML Inference on Kubernetes: Panacea or Folly? - Manasi Vartak, Verta Inc. Not able to join us at Kubecon? Tune in to our live broadcast on December 2 @ 2 PM ET.

As builders of an MLOps platform, we often get asked whether serverless is the right compute architecture to deploy models. The cost savings touted by serverless seem extremely appealing for ML workloads as for other traditional workloads. However, the special requirements of ML models as related to hardware and resources can cause impediments to using serverless architectures. To provide the best solution to our customers, we ran extensive benchmarking to compare serverless to traditional computing for inference workloads. In particular, we evaluated inference workloads on different systems including AWS Lambda, Google Cloud Run, and Verta.

In this series of posts, we cover how to deploy ML models on each of the above platforms and summarize our results in our benchmarking blog post.

- How to deploy ML models on AWS Lambda

- How to deploy ML models on Google Cloud Run

- How to deploy ML models on Verta

- Serverless for ML Inference: a benchmark study

This post talks about how to deploy models on the Verta Platform, along with the pros and cons of using this system for inference.

What is Verta?

Verta is an end-to-end platform for MLOps. It takes a model all the way from versioning it during development to packaging, deploying, and monitoring it in production. Verta is based on Kubernetes and offers the ability to run models in containers among other modes of model deployment.

Like serverless systems, with Verta, the user does not have to worry about provisioning or managing resources or infrastructure -- the Verta system does so automatically. Similarly, Verta provides autoscaling capabilities that allow the system to adapt to changing query loads. Unlike serverless systems though, Verta models are always warm, i.e., there is always at least one copy of a deployed model running and can instantly serve requests. In addition, Verta provides users the ability to finely control the hardware resources on which a model is served.

In this blog, we will describe how to run ML models on Verta. In particular, we will demonstrate how to run DistilBERT on Verta.

Deploying your Model. In 2 Steps. For real.

First off, to gain access to the Verta platform, sign up for a free trial here!

Next, once you have access to the Verta platform, set up your Python client and Verta credentials.

Deploying a model on Verta requires just two steps: (1) the user must log a model with the right interface and (2) the user can deploy it! Unlike offerings such as Lambda or Google Cloud Run, there is no need to worry about resources constraints or making your model fit into them.

Step 1: Logging a Model

Let’s go ahead and create a model class that will take our input request, apply the DistilBERT question and answer model from HuggingFace, and return predictions.

In order to serve a model, Verta requires that the model class expose __init__()and predict() methods. That’s it. Other than that, the model code can execute arbitrary logic.

Next, we register the model into Verta’s model registry in order to version our deployed models. To register a model version, we provide it a name, version id, the set of artifacts that belong to the model, and the environment (i.e., libraries required by the model such as PyTorch & HuggingFace’s transformers). Model artifacts usually include the weights file or pickle file with the serialized model.

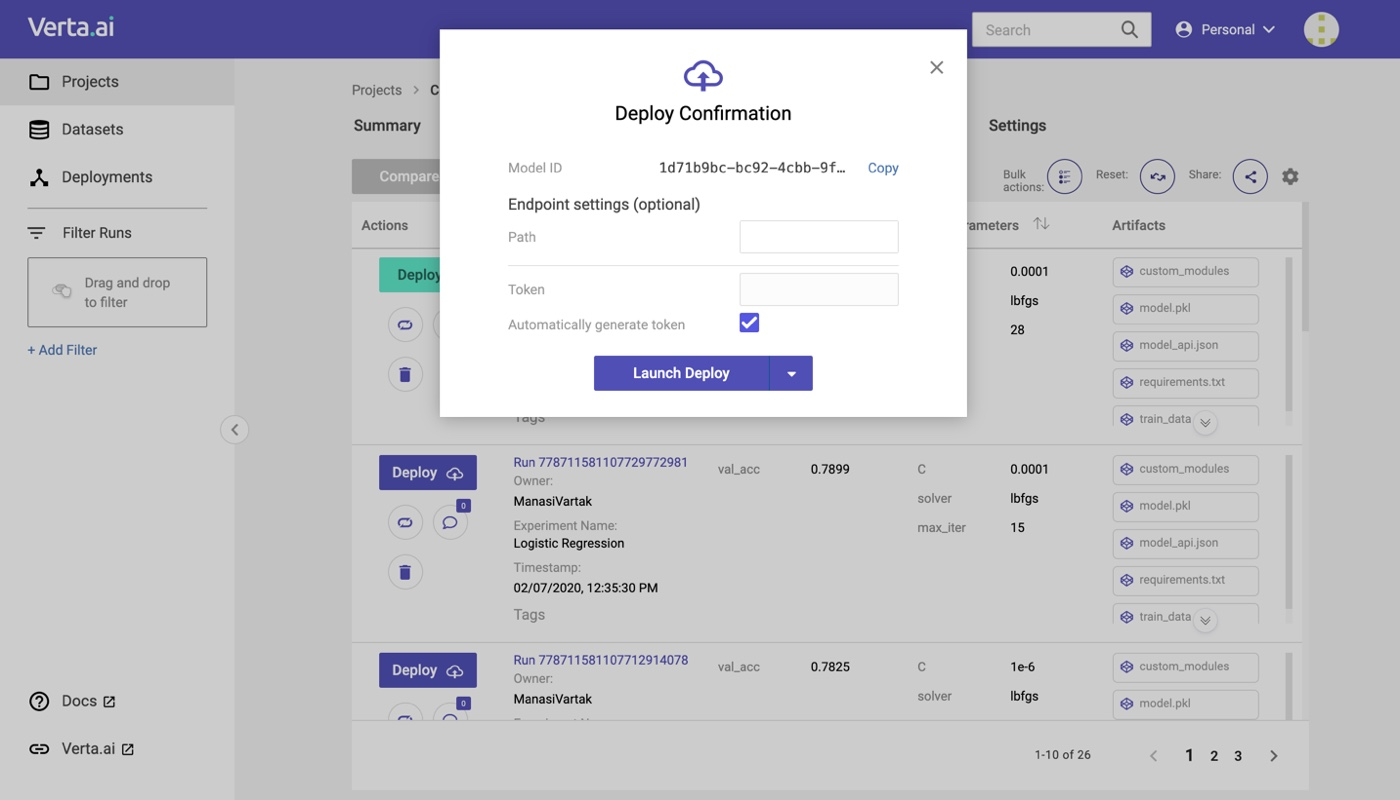

Step 2: Deployment and Predictions

That’s it. We now specify the endpoint (i.e., URL) where we would like the model to deploy and optionally specify resource requirements for the model. With that setup in place, we deploy the model and we are done!

How awesome is that?

In the background, Verta has gone off and fetched the relevant model artifacts, it has added scaffolding code and packaged the model into a server, it has created Kubernetes services and other associated elements to run and scale the model, and it has provisioned this endpoint URL. All without any code surgery, special handling of large model files, or interacting with Kubernetes directly.

Finally, now you can make predictions via the Python client, via cURL, via the REST library for your favorite language, you name it.

So should you use Verta for ML inference?

If you have been following our blog series on serverless for ML inference, you will have noticed the large amount of effort involved in deploying models on both AWS Lambda and Google Cloud Run. Whether in optimizing the model and library code to fit in the constraints of the serverless system or the intricate multi-step process, serverless platforms are not well suited for ML inference.

In contrast, a purpose-built system like Verta that abstracts away the details of the infrastructure while allowing the user full freedom to use the required amount of resources to run a model allows users to rapidly and instantly deploy models.

About the Author:

Michael Liu is a software developer with prior experience in deep learning and information processing. At Verta, he works on developing their Python client library in addition to supporting its user community with workflow examples and video walkthroughs.

About Verta:

Verta provides AI/ML model management and operations software that helps enterprise data science teams to manage inherently complex model-based products. Verta’s production-ready systems help data science and IT operations teams to focus on their strengths and rapidly bring AI/ML advances to market. Based in Palo Alto, Verta is backed by Intel Capital and General Catalyst. For more information, go to www.verta.ai or follow @VertaAI.

Subscribe To Our Blog

Get the latest from Verta delivered directly to you email.